
How to Run DeepSeek-R1 Locally on Your Computer

Did You Know You Can Run DeepSeek-R1 on Your Own Computer? Here’s How: A Step-by-Step Guide


It’s 11:30 p.m. as I write this. I’m working on a workflow automation and needed help from DeepSeek-R1 with a few tricky problems. Sadly, it keeps saying “The server is busy; please try again later.” I’m getting impatient and frustrated.

You’ve probably been in this situation too, right? So let’s solve the problem by running the model on our own computer. But how?

Trust me, it’s a simple three-step process that should take you no more than 15 minutes.

In this article, I won’t just download the model and start prompting it in the terminal, because that isn’t very user-friendly. Instead, we’ll set up a ChatGPT-style UI where we can type prompts and read the responses in a clean, visually appealing interface.

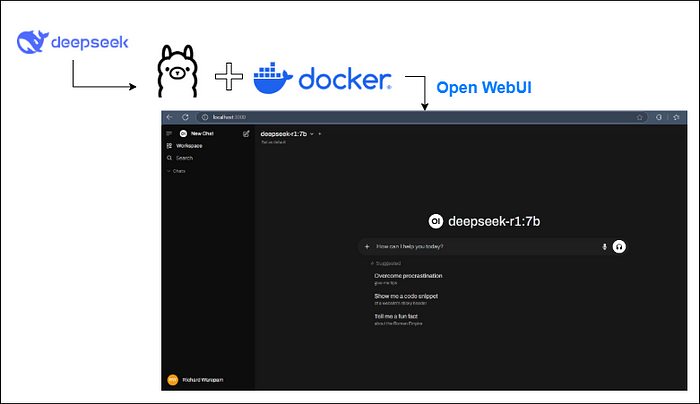

Doesn’t that sound great? Here’s how we plan to do it:

- Install Ollama, which lets us download open models such as DeepSeek-R1 and run them locally on our computer.

- Then, using Docker, we will install Open WebUI, which provides a browser-based chat experience. So, we need Docker…